The Google File System (GFS) is a distributed file system created by Google for large, distributed, data-intensive applications. Unlike HDFS (Hadoop Distributed File System), which is open source, GFS is proprietary. Like other distributed file systems, it aims for scalability, availability, reliability, and performance, but its design was driven by observations of Google's application workloads and technological environment (current and anticipated), which led its designers to discard some assumptions that hold for other distributed file systems.

Design Assumptions: The GFS design rests on several assumptions drawn from Google's current and anticipated workloads. They present both challenges and opportunities that the system has to address:

- The system is composed of inexpensive components that will often fail.

- The system stores large files, typically 100 MB or larger with multi-GB files common, each divided into 64 MB chunks.

- Read and write workload: reads are mostly large streaming reads plus some small random reads; writes are mostly large sequential writes that append data to files.

- GFS processes large volumes of data in bulk, so high sustained bandwidth matters more than low latency.
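To make the chunking assumption concrete, the arithmetic can be sketched as follows. This is a minimal illustration, not GFS code: the function names `chunk_count` and `chunk_index` are hypothetical, and only the 64 MB chunk size comes from the text above.

```python
CHUNK_SIZE = 64 * 1024 * 1024  # 64 MB, the chunk size stated above


def chunk_count(file_size: int) -> int:
    """Number of chunks needed to hold file_size bytes (ceiling division)."""
    return (file_size + CHUNK_SIZE - 1) // CHUNK_SIZE


def chunk_index(byte_offset: int) -> int:
    """Index of the chunk that contains the given byte offset within a file."""
    return byte_offset // CHUNK_SIZE


if __name__ == "__main__":
    three_gib = 3 * 1024**3
    print(chunk_count(three_gib))          # 48 chunks for a 3 GiB file
    print(chunk_index(200 * 1024 * 1024))  # offset 200 MiB falls in chunk 3
```

A multi-GB file therefore maps to only a few dozen chunks, which helps explain why a large chunk size keeps the amount of per-file metadata small.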

Architecture:

A typical GFS cluster, as shown in the architecture diagram below, consists of:

- A single GFS master: maintains all file-system metadata (the file and chunk namespaces, the mapping from files to chunks, and the locations of chunk replicas), which it keeps in memory.

- Multiple GFS chunk servers: store the 64 MB chunks that make up the data files.

- GFS clients: read and write the data files by reading and writing their chunks.

All of the components are commodity Linux machines. File data never flows through the GFS master: clients read and write chunks directly on the chunk servers, and separating metadata from chunk data keeps the master from becoming a bottleneck. The communication proceeds as follows:

- A GFS client asks the GFS master for the location of a particular chunk.

- The GFS master returns a list of chunk handles and replica locations.

- The GFS client caches this list and then communicates with a nearby chunk server to perform the read or write. With the list cached, it can work independently of the GFS master for further operations on the same chunks.
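The lookup steps above can be sketched in a few lines. This is a simplified model under stated assumptions, not the real GFS client library: the `Master` and `Client` classes, their method names, and the `lookups` counter are all hypothetical, and the actual chunk read from the chunk server is left out.

```python
from __future__ import annotations

from dataclasses import dataclass, field

CHUNK_SIZE = 64 * 1024 * 1024  # 64 MB chunks


@dataclass
class Master:
    """Holds metadata only; file data never passes through it."""
    file_chunks: dict[str, list[str]] = field(default_factory=dict)  # file -> chunk handles
    locations: dict[str, list[str]] = field(default_factory=dict)    # handle -> chunk servers
    lookups: int = 0  # counts metadata requests, for illustration only

    def lookup(self, filename: str, chunk_index: int) -> tuple[str, list[str]]:
        self.lookups += 1
        handle = self.file_chunks[filename][chunk_index]
        return handle, self.locations[handle]


@dataclass
class Client:
    master: Master
    cache: dict[tuple[str, int], tuple[str, list[str]]] = field(default_factory=dict)

    def locate(self, filename: str, byte_offset: int) -> tuple[str, list[str]]:
        """Translate a byte offset to a chunk index, asking the master only on a cache miss."""
        key = (filename, byte_offset // CHUNK_SIZE)
        if key not in self.cache:
            self.cache[key] = self.master.lookup(*key)
        return self.cache[key]


if __name__ == "__main__":
    master = Master(
        file_chunks={"/logs/web.log": ["h0", "h1"]},
        locations={"h0": ["cs1", "cs2"], "h1": ["cs2", "cs3"]},
    )
    client = Client(master)
    print(client.locate("/logs/web.log", 70 * 1024 * 1024))   # chunk 1: asks the master
    print(client.locate("/logs/web.log", 100 * 1024 * 1024))  # chunk 1 again: cache hit
    print(master.lookups)                                     # 1
```

Both offsets fall in the same chunk, so the master is contacted once; the second call is answered entirely from the client's cache.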

Operation log: This log is central to GFS. Every metadata change is recorded in it sequentially, and the master periodically checkpoints its state. If the GFS master crashes, it recovers by loading the latest checkpoint and replaying the log records written after it. Because the log is the persistent record of the metadata, it is replicated on multiple remote machines.
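The checkpoint-plus-log recovery idea can be illustrated with a toy model. Everything here is an assumption made for the sketch: the `MasterState` class, the three record types, and the use of JSON strings in memory stand in for whatever on-disk format and replication GFS actually uses.

```python
import json


class MasterState:
    """Toy in-memory metadata store with a write-ahead operation log."""

    def __init__(self):
        self.files = {}         # file name -> list of chunk handles
        self.checkpoint = "{}"  # serialized snapshot of self.files
        self.log = []           # serialized records written since the checkpoint

    def apply(self, record):
        op, name, arg = record
        if op == "create":
            self.files[name] = []
        elif op == "add_chunk":
            self.files[name].append(arg)
        elif op == "delete":
            self.files.pop(name, None)

    def mutate(self, op, name, arg=None):
        record = [op, name, arg]
        self.log.append(json.dumps(record))  # log the change before applying it
        self.apply(record)

    def take_checkpoint(self):
        self.checkpoint = json.dumps(self.files)
        self.log.clear()  # older records are no longer needed for recovery


def recover(checkpoint, log):
    """Rebuild state after a crash: load the checkpoint, then replay later records."""
    state = MasterState()
    state.files = json.loads(checkpoint)
    for line in log:
        state.apply(json.loads(line))
    state.checkpoint = checkpoint
    state.log = list(log)
    return state


if __name__ == "__main__":
    m = MasterState()
    m.mutate("create", "/a")
    m.mutate("add_chunk", "/a", "h0")
    m.take_checkpoint()
    m.mutate("add_chunk", "/a", "h1")  # only this record must be replayed
    print(recover(m.checkpoint, m.log).files)
```

Checkpointing keeps recovery fast because only the records written after the latest checkpoint have to be replayed.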

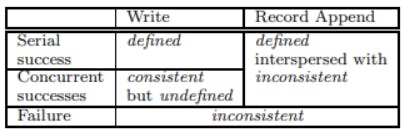

Consistency Model: GFS has a relaxed consistency model that is relatively simple and flexible. After a mutation, a region of a file can be in one of three states:

- Defined: the region is consistent, and clients see exactly what the mutation wrote. This is the result of a successful mutation with no interference from concurrent writers.

- Consistent but undefined: all GFS clients see the same data regardless of the chunk server they read from, but the data may be a mix of fragments from several concurrent successful mutations.

- Inconsistent: after a failed mutation, different clients may see different data at different times.
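One way to make these states concrete is a small classifier over replica contents. The sketch follows the GFS paper's usage of the terms: a region is inconsistent when replicas differ, defined when all replicas agree and hold exactly what one mutation wrote, and consistent but undefined otherwise. The function `classify_region` is hypothetical and deliberately simplified; real GFS does not compare replicas this way.

```python
def classify_region(replicas: list[bytes], writes: list[bytes]) -> str:
    """Classify a file region from its replica contents and the attempted writes.

    replicas: the bytes each replica currently holds for the region.
    writes:   the payloads that clients tried to write to the region.
    """
    if len(set(replicas)) > 1:
        # Replicas disagree, so readers may see different data.
        return "inconsistent"
    if replicas and replicas[0] in writes:
        # All replicas agree and hold one write in its entirety.
        return "defined"
    # All replicas agree, but on a mix that no single write produced.
    return "consistent but undefined"


if __name__ == "__main__":
    print(classify_region([b"AAAA", b"AAAA"], [b"AAAA"]))           # defined
    print(classify_region([b"AABB", b"AABB"], [b"AAAA", b"BBBB"]))  # consistent but undefined
    print(classify_region([b"AAAA", b"AA"], [b"AAAA"]))             # inconsistent
```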

System Interactions:

- Leases and Mutation: The GFS master does not take part in mutating file data. Instead, it grants a lease on a chunk to one of the chunk servers holding a replica, which becomes the primary. The primary picks a serial order for all mutations to that chunk, and the other replicas apply them in the same order.

- Data Flow: Once a lease is in place, GFS clients push the data for a mutation to the chunk servers. Data flow is decoupled from control flow: the data is forwarded along a chain of chunk servers, while the write request itself goes to the primary.

- Snapshot: This feature makes a nearly instantaneous copy of a file or a large data set. Users rely on it to branch large data sets or to checkpoint the current state before trying changes that they can later commit or roll back.
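The lease mechanism can be sketched as follows. This is an illustrative model, not GFS code: the class names, the choice of the first listed replica as primary, and the 60-second lease duration are assumptions made for the example, and data transfer between machines is reduced to in-process method calls.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Master:
    lease_seconds: float = 60.0  # assumed lease duration for this sketch
    leases: dict[str, tuple[str, float]] = field(default_factory=dict)  # chunk -> (primary, expiry)

    def primary_for(self, chunk: str, replicas: list[str], now: float) -> str:
        """Return the current lease holder, granting a new lease if none is valid."""
        holder = self.leases.get(chunk)
        if holder is None or holder[1] <= now:
            holder = (replicas[0], now + self.lease_seconds)
            self.leases[chunk] = holder
        return holder[0]


@dataclass
class ChunkReplica:
    name: str
    applied: list[bytes] = field(default_factory=list)

    def apply(self, serial: int, payload: bytes) -> None:
        # Every replica applies mutations strictly in the primary's serial order.
        assert serial == len(self.applied), "mutation applied out of order"
        self.applied.append(payload)


@dataclass
class Primary:
    local: ChunkReplica
    secondaries: list[ChunkReplica]
    next_serial: int = 0

    def mutate(self, payload: bytes) -> int:
        """Assign the next serial number and apply the mutation on every replica."""
        serial = self.next_serial
        self.next_serial += 1
        for replica in [self.local, *self.secondaries]:
            replica.apply(serial, payload)
        return serial


if __name__ == "__main__":
    master = Master()
    print(master.primary_for("h0", ["cs1", "cs2"], now=0.0))  # cs1 gets the lease
    primary = Primary(ChunkReplica("cs1"), [ChunkReplica("cs2")])
    primary.mutate(b"first")
    primary.mutate(b"second")
    print(primary.secondaries[0].applied)  # same order as on the primary
```

Because only the lease holder assigns serial numbers, concurrent clients cannot cause replicas to apply mutations in different orders, and the master stays out of the data path.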

The master performs several other operations as well; notable ones include garbage collection and namespace locking. All in all, this design has helped Google store and process big data effectively. Its main features are lazy space allocation, garbage collection, and a master that handles only metadata, which keeps the design both simple and efficient.

- Publication: ACM SIGOPS Operating Systems Review, Volume 37, Issue 5

- Published date: December 2003